Technology trends such as artificial intelligence (AI) and machine learning (ML), 5G, robotics, and blockchain are topics of great interest to many people. Let’s explore!

Top 10 future technology trends

1. Artificial intelligence (AI) and machine learning (ML)

Artificial Intelligence (AI) refers to the ability of machines and computer systems to perform tasks that would normally require human intelligence, such as visual perception, speech recognition, decision-making, and language translation.

AI is expected to play a major role in many industries and applications, including healthcare, finance, customer service, and manufacturing. AI is commonly divided into narrow (or weak) AI, which is designed for a specific task, and general (or strong) AI, which would be able to perform any intellectual task that a human can. The AI systems in use today are narrow AI; general AI remains hypothetical.

The development of AI is expected to bring both opportunities and challenges. The challenges include job displacement and ethical concerns about how AI is used.

Machine Learning (ML) is a subset of AI that enables systems to learn and improve from experience without being explicitly programmed. ML systems use statistical models and algorithms to analyze data and make predictions or decisions based on it. The main types of ML are supervised learning, unsupervised learning, semi-supervised learning, and reinforcement learning.

Supervised learning involves training a model on a labeled dataset, where the desired output is already known. This is used for tasks such as classification and regression.
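As a minimal sketch of supervised classification, the example below uses a 1-nearest-neighbor rule, which is only one of many possible methods. The dataset, the feature meanings, and the labels are made up for illustration.

```python
# Toy labeled dataset (invented for illustration):
# each entry is ((height_cm, weight_kg), label).
training_data = [
    ((150.0, 50.0), "small"),
    ((155.0, 55.0), "small"),
    ((180.0, 85.0), "large"),
    ((185.0, 90.0), "large"),
]


def squared_distance(a, b):
    """Squared Euclidean distance between two feature tuples."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def predict(features):
    """Return the label of the closest training example (1-nearest neighbor)."""
    _, label = min(
        training_data,
        key=lambda item: squared_distance(item[0], features),
    )
    return label


print(predict((152.0, 52.0)))  # nearest examples are labeled "small"
print(predict((182.0, 88.0)))  # nearest examples are labeled "large"
```

The known labels in `training_data` are what make this supervised: the model answers new questions by comparing them with examples whose answers are already given.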

Unsupervised learning involves training a model on an unlabeled dataset, where the desired output is unknown. This is used for tasks such as clustering and dimensionality reduction.
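Clustering can be sketched with a small one-dimensional k-means loop. This is an illustrative toy under simplifying assumptions (fixed starting centers, a fixed number of iterations, made-up numbers), not a production implementation.

```python
def kmeans_1d(values, initial_centers, iterations=10):
    """Group numbers into clusters around the nearest center (1-D k-means)."""
    centers = list(initial_centers)
    clusters = [[] for _ in centers]
    for _ in range(iterations):
        # Assignment step: put each value in the cluster of its nearest center.
        clusters = [[] for _ in centers]
        for value in values:
            nearest = min(range(len(centers)), key=lambda i: abs(value - centers[i]))
            clusters[nearest].append(value)
        # Update step: move each center to the mean of its cluster.
        # An empty cluster keeps its previous center.
        centers = [
            sum(cluster) / len(cluster) if cluster else centers[i]
            for i, cluster in enumerate(clusters)
        ]
    return centers, clusters


# Unlabeled data (invented): no "correct answer" is provided.
readings = [1.0, 2.0, 3.0, 10.0, 11.0, 12.0]
centers, clusters = kmeans_1d(readings, initial_centers=[1.0, 12.0])
print(centers)
print(clusters)
```

No labels are supplied here; the two groups emerge only from how close the values are to each other.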

Semi-supervised learning is a combination of supervised and unsupervised learning, where the model is trained on a mixture of labeled and unlabeled data.

Reinforcement learning involves training an agent to make decisions by interacting with an environment and receiving feedback in the form of rewards or penalties.
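The reward-and-penalty loop can be sketched with a tiny epsilon-greedy agent. The environment below is hypothetical and deliberately simple: it has two actions with fixed, deterministic rewards, whereas real environments are usually noisy and have many states.

```python
import random

# Hypothetical environment: "left" is penalized, "right" is rewarded.
REWARDS = {"left": -1.0, "right": 1.0}


def train(steps=100, epsilon=0.1, seed=0):
    """Estimate the value of each action from rewards received by trying it."""
    rng = random.Random(seed)
    actions = list(REWARDS)
    estimates = {action: 0.0 for action in actions}
    counts = {action: 0 for action in actions}
    for _ in range(steps):
        untried = [a for a in actions if counts[a] == 0]
        if untried:
            action = untried[0]            # try every action at least once
        elif rng.random() < epsilon:
            action = rng.choice(actions)   # explore: pick a random action
        else:
            action = max(actions, key=lambda a: estimates[a])  # exploit
        reward = REWARDS[action]           # feedback from the environment
        counts[action] += 1
        # Incremental average of the rewards seen for this action.
        estimates[action] += (reward - estimates[action]) / counts[action]
    return estimates


estimates = train()
print(estimates)
print(max(estimates, key=estimates.get))  # the action the agent now prefers
```

The agent is never told which action is correct; it learns to prefer `"right"` only from the feedback it receives.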

ML is widely used in applications such as natural language processing (NLP), computer vision, recommendation systems, and predictive maintenance.

2. Edge computing

Edge computing refers to a distributed computing architecture where data processing and analysis occur at or near the source of the data, rather than in a centralized data center or the cloud. Edge computing is designed to address the limitations of traditional cloud computing, such as latency, bandwidth, and privacy concerns. By bringing computation and storage closer to the source of the data, edge computing can reduce latency and improve the speed and responsiveness of applications, especially for IoT devices and real-time applications.

Edge computing is expected to play a crucial role in various industries and applications, such as healthcare, industrial IoT, autonomous vehicles, and smart cities, among others. Edge computing will continue to evolve and become more widespread as the demand for real-time data processing and low-latency applications increases. However, edge computing also introduces new challenges, such as security, manageability, and the need for a new set of edge-specific tools and technologies.
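One common edge pattern is to process raw readings on the device and send only a compact summary upstream. The sketch below illustrates that idea; the function name, the field names, and the threshold are assumptions made for this example, not part of any standard.

```python
def summarize_on_edge(readings, alert_threshold):
    """Reduce raw sensor readings to a small summary on the edge device."""
    if not readings:
        return {"count": 0, "mean": None, "max": None, "alerts": []}
    return {
        "count": len(readings),
        "mean": sum(readings) / len(readings),
        "max": max(readings),
        # Only unusual values are forwarded individually.
        "alerts": [r for r in readings if r > alert_threshold],
    }


# Invented temperature readings collected locally by a sensor.
raw_temperatures_c = [20.0, 21.0, 19.0, 40.0]
summary = summarize_on_edge(raw_temperatures_c, alert_threshold=30.0)
print(summary)  # this summary, not every raw reading, would be sent to the cloud
```

Sending the summary instead of every reading is what saves bandwidth, and deciding about alerts locally is what avoids a round trip to a distant data center.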

3. 5G networks and Internet of Things (IoT)

5G is the fifth generation of mobile networks, designed to provide faster and more reliable wireless connectivity compared to previous generations. 5G networks offer significant improvements in terms of speed, latency, capacity, and reliability, making them suitable for a wide range of applications and use cases, such as connected cars, virtual and augmented reality, and the Internet of Things (IoT).

5G networks use a combination of new technologies and spectrum to achieve their performance improvements, including millimeter-wave frequencies, small cells, beamforming, and massive MIMO (multiple-input multiple-output). These technologies allow 5G networks to provide faster speeds and lower latency, as well as support a larger number of connected devices and applications.

The deployment of 5G networks is ongoing and is expected to continue in the coming years. As 5G networks become more widely available, they are expected to transform many industries and bring new opportunities for innovation and growth. However, the deployment of 5G networks is also facing some challenges, such as spectrum availability, network security, and the need for investment in new infrastructure.

The Internet of Things (IoT) refers to the growing network of physical devices, vehicles, home appliances, and other items that are embedded with sensors, software, and connectivity, allowing them to collect and exchange data over the internet. The IoT allows for the creation of smart and connected systems that can automate and optimize various processes, from manufacturing and supply chain management to energy consumption and home automation.

IoT devices can be connected to each other and to the internet, allowing for the exchange of data and the creation of new applications and services. IoT has the potential to bring numerous benefits, such as increased efficiency, improved safety, and new business opportunities. However, it also presents new challenges, such as security and privacy concerns, and the need for standardization and interoperability.

IoT is expected to continue growing and becoming more widespread in the coming years, as the number of connected devices increases, and new applications and services are developed. IoT will likely play a major role in the development of smart cities, smart homes, and Industry 4.0, among other areas.

4. Virtual and augmented reality (VR and AR)

Virtual Reality (VR) and Augmented Reality (AR) are two technologies that either immerse users in computer-generated environments or enrich their view of the real world with digital content.

Virtual Reality places users inside a fully computer-generated environment, usually through a VR headset. VR is used in applications such as gaming, simulation, and training.

Augmented Reality, on the other hand, overlays digital information on the real world, usually through a smartphone or AR glasses. AR is used in applications such as education, entertainment, and industrial design.

Both VR and AR are expected to keep growing and become more widespread in the coming years, as hardware becomes more advanced and accessible and as new applications and services are developed. VR and AR have the potential to revolutionize industries such as gaming, education, and marketing. However, they also face challenges, such as technical limitations and the need for more widespread adoption.

5. Cybersecurity and privacy

Cybersecurity refers to the protection of digital systems, networks, and data from unauthorized access, use, disclosure, disruption, modification, or destruction. It is a critical issue in today’s interconnected and digitized world, as cyber threats and attacks are becoming more frequent and sophisticated.

Privacy refers to the protection of personal information, such as name, address, Social Security number, and other sensitive information. Privacy is a fundamental right that is becoming increasingly important in a world where personal information is widely collected, stored, and shared by individuals, organizations, and governments.

Cybersecurity and privacy are closely related, as the protection of personal information often requires the implementation of strong cybersecurity measures. Both cybersecurity and privacy are crucial for the protection of individuals, organizations, and governments, as well as for the functioning of the digital economy and society.

As technology continues to advance and the use of digital systems and data becomes more widespread, cybersecurity and privacy will continue to be major challenges that require ongoing attention and investment. This includes the development of new technologies and policies to enhance security and privacy, as well as the education of users on how to protect themselves online.

6. Blockchain

Blockchain is a decentralized, distributed ledger that records transactions in a secure and transparent way. It uses cryptography to maintain the integrity and reliability of the data stored in the blockchain.

A blockchain network is composed of nodes that maintain a copy of the ledger and validate transactions. Once a transaction is validated and added to a block, it becomes a permanent part of the blockchain, creating a transparent and unalterable record of all transactions.
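The way blocks link together can be sketched with a simple hash chain. This is a single-process illustration only: it leaves out the network of nodes, consensus, and digital signatures, and the block fields and transaction strings are assumptions made for the example.

```python
import hashlib
import json

GENESIS_PREVIOUS_HASH = "0" * 64  # placeholder "previous hash" for the first block


def block_hash(index, transactions, previous_hash):
    """SHA-256 hash over a block's contents, including the previous block's hash."""
    payload = json.dumps(
        {"index": index, "transactions": transactions, "previous_hash": previous_hash},
        sort_keys=True,
    )
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def add_block(chain, transactions):
    """Append a new block that points at the hash of the current last block."""
    previous_hash = chain[-1]["hash"] if chain else GENESIS_PREVIOUS_HASH
    index = len(chain)
    chain.append({
        "index": index,
        "transactions": transactions,
        "previous_hash": previous_hash,
        "hash": block_hash(index, transactions, previous_hash),
    })


def is_valid(chain):
    """Check that every block's hash and link to its predecessor still match."""
    for i, block in enumerate(chain):
        expected_previous = chain[i - 1]["hash"] if i > 0 else GENESIS_PREVIOUS_HASH
        if block["previous_hash"] != expected_previous:
            return False
        recomputed = block_hash(block["index"], block["transactions"], block["previous_hash"])
        if block["hash"] != recomputed:
            return False
    return True


chain = []
add_block(chain, ["alice pays bob 5"])
add_block(chain, ["bob pays carol 2"])
print(is_valid(chain))  # True

chain[0]["transactions"] = ["alice pays bob 500"]  # tamper with history
print(is_valid(chain))  # False
```

Because each block stores the hash of the block before it, changing an old transaction invalidates the stored hashes, which is what makes tampering detectable.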

The most well-known application of blockchain technology is Bitcoin, a decentralized digital currency. However, blockchain has the potential to be used in a wide range of industries and applications, such as supply chain management, voting systems, digital identity, and more.

One of the key advantages of blockchain is its decentralization, which eliminates the need for intermediaries and increases transparency, security, and efficiency. However, the adoption of blockchain technology is still in its early stages and faces challenges such as scalability, interoperability, and regulatory hurdles.

Blockchain is expected to continue evolving and becoming more widespread in the coming years, as new use cases and applications are developed, and as the technology becomes more mature and widely adopted.

7. Quantum computing

Quantum computing is a type of computing that uses the principles of quantum mechanics to perform certain types of computation more efficiently than classical computers. Classical computers use bits, each of which is either 0 or 1. Quantum computers use quantum bits, or qubits, which can exist in a superposition of 0 and 1 until they are measured.
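A single qubit can be described by two amplitudes, one for 0 and one for 1, and the squared magnitude of each amplitude gives the probability of measuring that value. The sketch below simulates this on a classical computer for one qubit using the standard Hadamard gate; it is a teaching illustration, not how real quantum hardware is programmed.

```python
import math


def hadamard(state):
    """Apply the Hadamard gate to a single-qubit state (amplitude_0, amplitude_1)."""
    a, b = state
    s = 1 / math.sqrt(2)
    return (s * (a + b), s * (a - b))


def probabilities(state):
    """Probability of measuring 0 and of measuring 1."""
    a, b = state
    return (abs(a) ** 2, abs(b) ** 2)


zero = (1.0, 0.0)              # a qubit that is definitely 0
superposed = hadamard(zero)    # an equal superposition of 0 and 1
print(probabilities(zero))
print(probabilities(superposed))  # approximately (0.5, 0.5)
```

Measuring the superposed qubit yields 0 or 1 with roughly equal probability, which is different from a classical bit that always holds one definite value.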

Quantum computing has the potential to revolutionize many fields, from cryptography and optimization to drug discovery and financial modeling. Its key advantage is the ability to solve certain problems much faster than classical computers, including some that are infeasible for classical computers in practice.

However, quantum computing is still in its early stages of development and faces many challenges, such as the need for large and complex systems, the difficulty of building and maintaining qubits, and the difficulty of programming and using quantum computers.

Despite these challenges, quantum computing is expected to continue advancing and becoming more widespread in the coming years, as new applications are developed and as the technology becomes more mature and accessible. This is likely to lead to new breakthroughs in many fields and a new era of computing.

8. Robotics and drones

Robotics and drones refer to the development and use of robots and unmanned aerial vehicles (UAVs), respectively.

Robotics is a field that involves the design, construction, operation, and use of robots. Robots can be used in a variety of applications, including manufacturing, healthcare, and space exploration, among others. The development of robotics has been driven by advances in technology, such as artificial intelligence and machine learning, which have enabled robots to become more capable, flexible, and autonomous.

Drones, also known as UAVs or unmanned aerial systems, are aircraft that are operated without a human pilot on board. Drones can be used in a wide range of applications, such as delivery, photography, agriculture, and military operations, among others. The development of drones has been driven by advances in technology, such as miniaturization, lightweight materials, and autonomous flight systems, which have enabled drones to become more affordable, versatile, and accessible.

Both robotics and drones are expected to continue growing and becoming more widespread in the coming years, as technology continues to advance, and as new applications and services are developed. Robotics and drones have the potential to revolutionize various industries, such as transportation, manufacturing, and agriculture, among others. However, they also face some challenges, such as safety, regulation, and ethical considerations.

9. Autonomous vehicles

Autonomous vehicles, also known as self-driving cars, are vehicles that are equipped with sensors, software, and other technologies that enable them to operate without a human driver. Autonomous vehicles use various technologies, such as artificial intelligence, computer vision, and GPS, to perceive their environment and make decisions about how to navigate the roads.

The development of autonomous vehicles has been driven by advances in technology, as well as a desire to increase road safety, reduce traffic congestion, and improve mobility for people who are unable to drive, such as the elderly and disabled. Autonomous vehicles have the potential to transform the way we live, work, and travel, as well as revolutionize industries such as transportation, delivery, and ridesharing, among others.

However, autonomous vehicles also face some challenges, such as regulatory, technical, and public acceptance issues. The development of autonomous vehicles requires significant investments in technology, infrastructure, and testing, and there are still many technical and ethical issues to be addressed before autonomous vehicles can be widely adopted.

Despite these challenges, the development of autonomous vehicles is expected to continue and accelerate in the coming years, as technology improves and as the benefits of autonomous vehicles become more apparent. Autonomous vehicles are likely to become increasingly widespread and integrated into our daily lives, leading to new opportunities and challenges in transportation, mobility, and beyond.

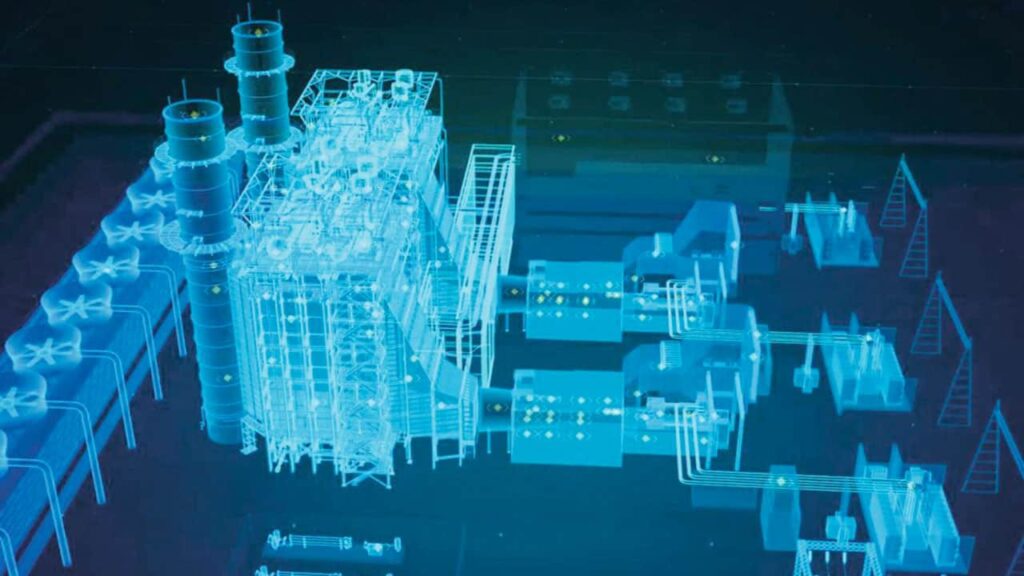

10. Digital twins and simulation

Digital twins and simulation refer to the use of digital representations and computer models to simulate and analyze real-world systems and processes.

A digital twin is a virtual representation of a physical asset, system, or process that can be used to simulate and analyze its behavior and performance. Digital twins are created using data from sensors, simulations, and other sources, and can be used to optimize operations, predict future behavior, and diagnose and solve problems.

Simulation is the process of creating a model of a system or process and using it to analyze and understand its behavior and performance. Simulation can be used in a wide range of applications, from product design and testing to training and decision-making.
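As a rough sketch of how a digital twin and a simulation fit together, the example below mirrors a water tank's sensor reading and then simulates forward to predict when the tank will be full. The `TankTwin` class, its fields, and all of the numbers are hypothetical and chosen only for illustration.

```python
class TankTwin:
    """Hypothetical digital twin of a physical water tank."""

    def __init__(self, capacity_l, level_l=0.0):
        self.capacity_l = capacity_l
        self.level_l = level_l

    def sync(self, sensor_level_l):
        """Update the twin from the latest sensor reading of the real tank."""
        self.level_l = sensor_level_l

    def minutes_until_full(self, inflow_l_per_min, outflow_l_per_min, max_minutes=10_000):
        """Simulate minute by minute; return minutes until full, or None if never."""
        level = self.level_l
        for minute in range(max_minutes + 1):
            if level >= self.capacity_l:
                return minute
            level += inflow_l_per_min - outflow_l_per_min
            level = max(level, 0.0)  # the tank cannot hold a negative volume
        return None


twin = TankTwin(capacity_l=100.0)
twin.sync(sensor_level_l=40.0)  # reading from the (imaginary) physical tank
print(twin.minutes_until_full(inflow_l_per_min=5.0, outflow_l_per_min=2.0))  # 20
```

The simulation runs on the twin rather than on the real tank, so different inflow and outflow settings can be tried without any risk to the physical system.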

The use of digital twins and simulation is growing, driven by advances in technology, such as the Internet of Things, artificial intelligence, and cloud computing, which are enabling the creation of more complex and accurate models. Digital twins and simulation have the potential to revolutionize various industries, such as manufacturing, healthcare, and energy, among others, by enabling organizations to optimize their operations, make better-informed decisions, and reduce risks and costs.

However, the use of digital twins and simulation also faces some challenges, such as the need for large amounts of data, the difficulty of creating accurate and comprehensive models, and the need for specialized skills and knowledge.

Despite these challenges, the use of digital twins and simulation is expected to continue to grow in the coming years, as technology continues to advance and as organizations seek to gain new insights and efficiencies through the use of these tools.

The countries leading technology trends

The countries and regions leading technology trends and making significant contributions to the advancement of technology include:

- United States: The US is home to some of the world’s largest technology companies, such as Apple, Google, and Amazon, as well as numerous universities and research institutions that are at the forefront of technological innovation. The US has a thriving technology industry, with a strong culture of entrepreneurship, research, and investment.

- China: China has become a major player in the technology industry in recent years, with numerous companies and startups developing cutting-edge technologies and products. China has a large and rapidly growing economy, and its government has made significant investments in technology and innovation.

- Japan: Japan has a long history of technological innovation, with a strong focus on electronics, robotics, and other advanced technologies. The country has a large and sophisticated technology industry, as well as numerous universities and research institutions that are leading the way in technological research and development.

- South Korea: South Korea is another country with a thriving technology industry, with companies such as Samsung and LG leading the way in areas such as electronics, mobile devices, and displays. The government of South Korea has made significant investments in technology and innovation, and the country has a strong culture of innovation and entrepreneurship.

- Europe: Europe is home to several countries that are leading technology trends, including the United Kingdom, Germany, France, and Sweden, among others. Europe has a strong technology industry, as well as numerous universities and research institutions that are at the forefront of technological innovation.

The companies leading technology trends

Many companies are leading technology trends and shaping the future of technology. Some of the most notable include:

- Apple: Apple is a leader in the development of innovative technology products, including smartphones, laptops, and other devices. The company has a reputation for creating well-designed, user-friendly products that set the standard for the technology industry.

- Amazon: Amazon is a leading technology company that is transforming the way people shop and interact with technology. The company has a wide range of products and services, including e-commerce, cloud computing, and artificial intelligence.

- Google: Google is a leading technology company that is at the forefront of many cutting-edge technologies, including artificial intelligence, cloud computing, and the Internet of Things. The company’s search engine and other products are used by billions of people around the world, and its research and development efforts are shaping the future of technology.

- Microsoft: Microsoft is a technology giant that is best known for its Windows operating system and Office software suite. However, the company is also at the forefront of many other technological innovations, including cloud computing, artificial intelligence, and the Internet of Things.

- Facebook: Facebook, whose parent company was renamed Meta in 2021, is one of the largest social media companies in the world, with billions of users around the globe. The company is also at the forefront of many cutting-edge technologies, including artificial intelligence, virtual and augmented reality, and blockchain.